November 4th, 2024


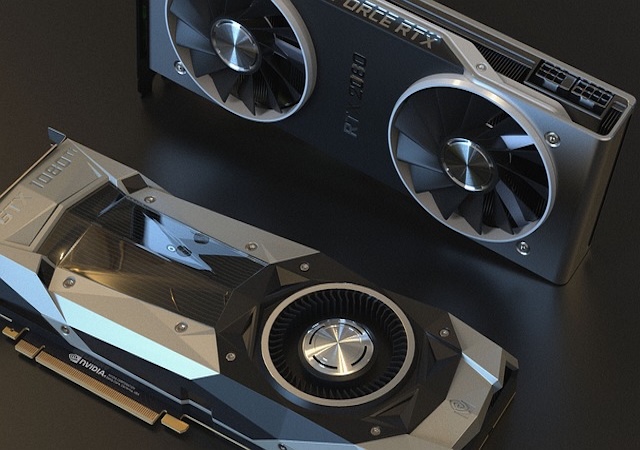

The rise of generative AI, large language models (LLMs), and powerful AI-driven software has led to an increasing demand for consumer-grade GPUs that can handle the complex computations required to run these models locally. Whether for small businesses, developers, or hobbyists looking to experiment with AI, having the right GPU can make a significant difference in performance, efficiency, and cost. Here’s an overview of the top GPUs on the market in 2025 for running local LLMs and AI software.

Key Considerations for Choosing a GPU for Local AI Workloads

Before diving into the top GPU options, it’s essential to understand what makes a GPU suitable for running AI models locally:

1. VRAM (Video RAM): Large language models and AI applications require high memory capacity. More VRAM lets you load and run larger models entirely on the GPU, without offloading layers to slower system RAM, which significantly improves performance.

2. CUDA Cores and Tensor Cores: NVIDIA GPUs, in particular, are popular for AI tasks due to their CUDA and Tensor cores, which allow for faster matrix and tensor computations. Tensor cores are specifically optimized for deep learning operations.

3. FP16/BF16 and INT8 Support: Modern AI models benefit from half-precision floating-point formats (FP16, BF16) and 8-bit integer (INT8) computation, which let GPUs handle AI tasks faster and more efficiently while conserving memory and power.

4. Software Compatibility: Some GPUs work better with specific AI software stacks, such as PyTorch, TensorFlow, and CUDA libraries. Compatibility with these libraries can enhance the model training and inference process.

5. Power Efficiency and Cooling: With GPUs consuming significant power and generating heat, cooling solutions and power efficiency are crucial, especially if you’re running prolonged training sessions on LLMs.
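To make the VRAM and precision points above concrete, here is a minimal sketch of the usual back-of-the-envelope estimate: parameter count multiplied by bytes per parameter. The function names and the 20% overhead for activations and KV cache are illustrative assumptions, not measured values; real usage depends on context length, batch size, and the runtime you use.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def estimated_vram_gb(params_billions: float, precision: str,
                      overhead: float = 0.2) -> float:
    """Weights plus an ASSUMED fractional overhead for activations and KV cache."""
    return weights_gb(params_billions, precision) * (1.0 + overhead)


if __name__ == "__main__":
    # Compare the same 7B-parameter model at three precisions.
    for precision in ("fp16", "int8", "int4"):
        weights = weights_gb(7, precision)
        total = estimated_vram_gb(7, precision)
        print(f"7B @ {precision}: ~{weights:.1f} GB weights, ~{total:.1f} GB total")
```

Halving the precision halves the weight footprint, which is why a quantized model often fits on a card that cannot hold the FP16 version.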

Top GPUs for Local LLMs and AI

Here’s a rundown of some of the best consumer-grade GPUs available, balancing performance, VRAM, power efficiency, and price for AI enthusiasts and developers.

1. NVIDIA GeForce RTX 5090

Specs Highlights:

• VRAM: 32GB GDDR7

• CUDA Cores: 21,760

• Tensor Cores: 5th Gen (optimized for AI and DLSS)

• Price: $1,800 - $2,000

NVIDIA’s flagship GeForce RTX 5090 offers substantial power for AI workloads. With 32GB of GDDR7 VRAM, the RTX 5090 can handle many medium-to-large LLMs and complex AI tasks, making it ideal for those looking to run inference or fine-tune smaller models on a consumer-grade card. The high number of CUDA cores and Tensor cores makes it extremely effective for deep learning, and NVIDIA’s strong support for CUDA libraries means seamless compatibility with most AI software.

The RTX 5090 also introduces advanced cooling and power-saving technologies, making it a good choice for extended training sessions. However, the high price point may put it out of reach for casual users.

2. NVIDIA RTX A6000

Specs Highlights:

• VRAM: 48GB GDDR6

• CUDA Cores: 10,752

• Tensor Cores: 3rd Gen

• Price: $3,500 - $4,000

While technically a workstation card, the RTX A6000 is highly regarded for consumer AI use. With a massive 48GB of VRAM, it’s an excellent choice for running large LLMs like Llama 2 70B in quantized form (at 4-bit precision the weights take roughly 35GB, whereas at FP16 they would need about 140GB), or for fine-tuning tasks that require high memory capacity. The A6000’s impressive memory bandwidth and large core count make it a favorite among AI researchers and professionals looking for top-tier performance without the need for enterprise GPUs.

However, it’s worth noting that the A6000’s price is steep, and it’s best suited for serious AI developers and small labs that require this level of VRAM.

3. AMD Radeon RX 8950 XT

Specs Highlights:

• VRAM: 32GB GDDR7

• Stream Processors: 8,800+

• AI Acceleration: RDNA 4 Enhanced Matrix Operations

• Price: $1,200 - $1,500

AMD’s RX 8950 XT has emerged as a powerful and cost-effective competitor to NVIDIA’s high-end GPUs. With 32GB of VRAM, it offers ample memory for running large models without needing to offload data constantly. AMD has also made strides in AI processing with RDNA 4, adding enhanced support for matrix operations and half-precision compute, making the RX 8950 XT highly capable for AI applications.

While AMD’s ROCm software stack still lacks the depth of NVIDIA’s CUDA ecosystem and its AI-specific libraries, it’s improving. Developers who prioritize VRAM and price-to-performance ratio may find the RX 8950 XT a compelling choice.

4. NVIDIA GeForce RTX 5080

Specs Highlights:

• VRAM: 16GB GDDR7

• CUDA Cores: 10,752

• Tensor Cores: 5th Gen

• Price: $1,000 - $1,200

For those looking for a balance between performance and price, the RTX 5080 provides substantial capabilities at a lower cost than the 5090. With 16GB of VRAM, it can run small-to-medium-sized models efficiently, making it an excellent choice for developers, small businesses, or AI enthusiasts who need reliable performance without the highest price tag.

The RTX 5080 benefits from NVIDIA’s extensive software ecosystem and CUDA support, making it suitable for a wide range of AI tasks, from natural language processing to computer vision.
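On an NVIDIA card, one quick way to see how much VRAM you actually have is the `nvidia-smi` tool that ships with the driver. The sketch below wraps its CSV query mode; the helper names `parse_gpu_csv` and `list_gpus` are made up for this example, and the function simply returns an empty list when the tool is missing or fails.

```python
import shutil
import subprocess

# Ask nvidia-smi for each GPU's name and total memory (in MiB), as bare CSV.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_csv(text: str) -> list[tuple[str, float]]:
    """Turn 'name, MiB' lines into (name, GiB) pairs, skipping unparsable lines."""
    gpus = []
    for line in text.strip().splitlines():
        name, _, mib = line.rpartition(",")
        try:
            gpus.append((name.strip(), int(mib) / 1024))
        except ValueError:
            continue  # e.g. memory reported as "[N/A]"
    return gpus


def list_gpus() -> list[tuple[str, float]]:
    """Return detected NVIDIA GPUs, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for name, gib in list_gpus():
        print(f"{name}: {gib:.1f} GiB")
```

Note that the tool reports MiB, so a card marketed as 24GB shows up as roughly 24 GiB minus a small reserved amount.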

5. Intel Arc Pro A80

Specs Highlights:

• VRAM: 24GB GDDR6

• AI Matrix Engines: Optimized for INT8

• Price: $900 - $1,100

Intel’s Arc Pro A80 is an intriguing option for budget-conscious AI developers. With 24GB of VRAM and optimized support for INT8 computations, it provides reasonable performance for smaller AI workloads and inference tasks. While not as powerful as the high-end NVIDIA and AMD offerings, the Arc Pro A80 is a solid choice for those working with smaller models or less memory-intensive AI applications.

Intel has been improving its support for AI frameworks, making the Arc Pro A80 a viable option for those looking to experiment with AI without investing heavily in top-tier hardware.
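Framework support across the three vendors covered above can be probed at runtime. Here is a hedged sketch using PyTorch: ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface as NVIDIA cards, and recent builds add a `torch.xpu` backend for Intel GPUs. The function name `pick_device` is an assumption for this example, and whether a given backend is present depends on which PyTorch build you installed.

```python
import importlib.util


def pick_device() -> str:
    """Return a PyTorch device string, falling back to "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed at all
    try:
        import torch
    except ImportError:
        return "cpu"  # installed but not importable in this environment

    # True on NVIDIA CUDA builds; AMD ROCm builds also report through torch.cuda.
    if torch.cuda.is_available():
        return "cuda"

    # Intel GPU backend; only present in newer PyTorch builds, so guard the lookup.
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"

    return "cpu"


if __name__ == "__main__":
    print(f"Selected device: {pick_device()}")
```

Writing code against a device string like this, rather than hard-coding `"cuda"`, keeps the same script usable on any of the cards in this list.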

Best Choice by Use Case

• For Professionals and Researchers Running Large Models: The NVIDIA RTX A6000 and NVIDIA GeForce RTX 5090 are the strongest options, thanks to their high VRAM and extensive CUDA support.

• For Developers Seeking High Performance without Breaking the Bank: NVIDIA GeForce RTX 5080 provides great performance for the price, ideal for versatile AI workloads.

• For Budget AI Enthusiasts: Intel Arc Pro A80 is a cost-effective choice for those experimenting with smaller models and inference tasks.

• For Memory-Intensive Applications at a Mid-Tier Price: AMD Radeon RX 8950 XT offers a high VRAM-to-price ratio, suitable for users needing more than 20GB of VRAM.
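When matching a card to a use case, it can help to turn the question around: given a VRAM budget, roughly how large a model fits? The sketch below does that arithmetic for two VRAM sizes that appear in the list above (24GB and 48GB). The function name and the 20% headroom for activations and KV cache are assumptions for illustration, so treat the results as rough upper bounds rather than guarantees.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def max_params_billions(vram_gb: float, precision: str,
                        overhead: float = 0.2) -> float:
    """Largest model (in billions of parameters) whose weights fit in vram_gb.

    `overhead` is an ASSUMED fraction reserved for activations and KV cache.
    """
    usable_gb = vram_gb / (1.0 + overhead)
    return usable_gb / BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for vram in (24, 48):
        for precision in ("fp16", "int8", "int4"):
            size = max_params_billions(vram, precision)
            print(f"{vram} GB @ {precision}: up to ~{size:.0f}B parameters")
```

Under these assumptions a 48GB card tops out around 20B parameters at FP16 but around 80B at 4-bit, which is why quantization matters so much for local use.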

Conclusion

As AI technology becomes more accessible, the demand for high-performance consumer GPUs is growing rapidly. The GPUs listed above offer some of the best options for running LLMs and other AI software locally. Depending on budget, application needs, and model size, users have a range of choices from affordable options to high-end GPUs that approach professional-grade performance.

Investing in the right GPU can empower developers and researchers to experiment with cutting-edge AI tools locally, making it easier to prototype, test, and deploy models without relying on costly cloud infrastructure. With the advancements in GPU technology in 2025, running AI locally is more feasible and efficient than ever.